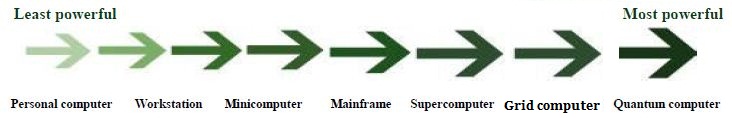

The term hardware refers to the physical components of a computer, such as the system unit, mouse, keyboard, monitor, printer, and scanner. In this category I want to discuss the hardware of 7 types of computers that work differently, from the least powerful to the most powerful.


History of Computer Hardware

The history of computing hardware is the record of the ongoing effort to make computer hardware faster, cheaper, and capable of storing more data.

Computing hardware evolved from machines that needed separate manual action to perform each arithmetic operation, to punched card machines, and then to stored-program computers. The history of stored-program computers relates first to computer architecture, that is, the organization of the units to perform input and output, to store data and to operate as an integrated mechanism (see block diagram to the right). Secondly, this is a history of the electronic components and mechanical devices that comprise these units. Finally, we describe the continuing integration of 21st-century supercomputers, networks, personal devices, and integrated computers/communicators into many aspects of today’s society. Increases in speed and memory capacity, and decreases in cost and size in relation to compute power, are major features of the history. As all computers rely on digital storage, and tend to be limited by the size and speed of memory, the history of computer data storage is tied to the development of computers.

Computer Hardware Components

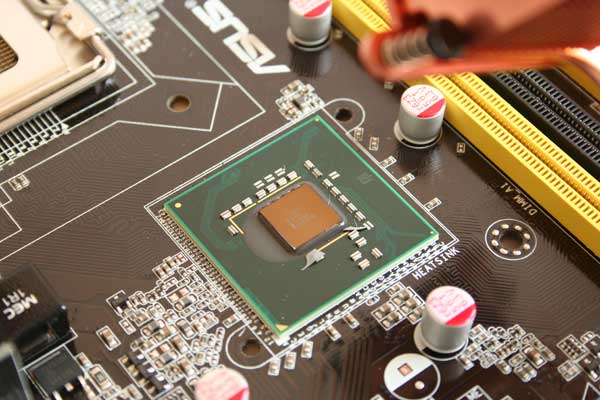

- Motherboard

- CPU

- VGA

- Sound card

- RAM

- H.D.D

- CD/DVD/Blu-ray ROM

- Monitor

- Printer

- Scanner

- Keyboard

- Mouse

- Power Supply

- Case

- NIC (Ethernet/Optical)

Computer Ports

- USB (Universal Serial Bus)

- PS/2

- Serial RS232/RS422/RS485

- SATA/PATA

- FireWire

- Parallel

- DVI/VGA

- Ethernet

- HDMI

- S-Video

- PCMCIA

Computer Slots

- PCIe (Video card)

- PCI (Network, SCSI, Sound, Video card)

- AGP (Video card)

- ISA (Network, Sound, Video card)

- EISA (Network card, Video card, SCSI)

- AMR (Modem,Sound card)

- VESA (Video card)

A workstation is a high-end microcomputer designed for technical or scientific applications. Intended primarily to be used by one person at a time, workstations are commonly connected to a local area network and run multi-user operating systems. The term workstation has also been used to refer to a mainframe computer terminal or a PC connected to a network.

Historically, workstations had offered higher performance than desktop computers, especially with respect to CPU and graphics, memory capacity, and multitasking capability. They are optimized for the visualization and manipulation of different types of complex data such as 3D mechanical design, engineering simulation (e.g. computational fluid dynamics), animation and rendering of images, and mathematical plots. Consoles consist of a high resolution display, a keyboard and a mouse at a minimum, but also offer multiple displays, graphics tablets, 3D mice (devices for manipulating 3D objects and navigating scenes), etc. Workstations are the first segment of the computer market to present advanced accessories and collaboration tools.

Presently, the workstation market is highly commoditized and is dominated by large PC vendors, such as Dell and HP, selling Microsoft Windows or Linux systems running on Intel Xeon or AMD Opteron processors. Alternative UNIX-based platforms are provided by Apple Inc.

Minicomputer (colloquially, mini) is a term for a class of smaller computers that evolved in the mid-1960s and sold for much less than mainframe and mid-size computers from IBM and its direct competitors. In a 1970 survey, the New York Times suggested a consensus definition of a minicomputer as a machine costing less than $25,000, with an input-output device such as a teleprinter and at least 4K words of memory, that is capable of running programs in a higher-level language such as FORTRAN or BASIC. The class formed a distinct group with its own hardware architectures and operating systems.

When single chip CPUs appeared, beginning with the Intel 4004 in 1971, the term minicomputer came to mean a machine that lies in the middle range of the computing spectrum, in between the smallest mainframe computers and the microcomputers. The term minicomputer is little used today; the contemporary term for this class of system is midrange computer, such as the higher-end SPARC, POWER and Itanium-based systems from Oracle, IBM and Hewlett-Packard.

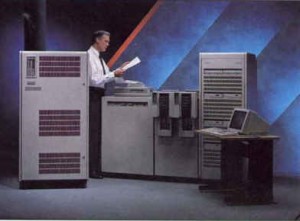

Mainframe computers (colloquially referred to as “big iron”) are powerful computers used primarily by corporate and governmental organizations for critical applications, bulk data processing such as census, industry and consumer statistics, enterprise resource planning, and transaction processing. The term originally referred to the large cabinets that housed the central processing unit and main memory of early computers. Later, the term was used to distinguish high-end commercial machines from less powerful units. Most large-scale computer system architectures were established in the 1960s, but continue to evolve.

Modern mainframe design is defined less by single-task computational speed (typically measured as a MIPS rate, or FLOPS in the case of floating-point calculations) than by redundant internal engineering and the resulting high reliability and security, extensive input-output facilities, strict backward compatibility with older software, and high hardware and computational utilization rates to support massive throughput. These machines often run for long periods of time without interruption, given their inherent high stability and reliability.

Software upgrades usually require resetting the operating system or portions thereof, and are non-disruptive only when using virtualizing facilities such as IBM’s z/OS and Parallel Sysplex, or Unisys’ XPCL, which support workload sharing so that one system can take over another’s application while it is being refreshed. Mainframes are defined by high availability, one of the main reasons for their longevity, since they are typically used in applications where downtime would be costly or catastrophic. Reliability, availability and serviceability (RAS) is a defining characteristic of mainframe computers. Proper planning and implementation are required to exploit these features; if improperly implemented, they may inhibit the benefits provided. In addition, mainframes are more secure than other computer types. The National Institute of Standards and Technology (NIST) vulnerabilities database, US-CERT, rates traditional mainframes such as IBM zSeries, Unisys Dorado and Unisys Libra as among the most secure, with vulnerabilities in the low single digits as compared with thousands for Windows, Linux and Unix.

In the 1960s, most mainframes had no explicitly interactive interface. They accepted sets of punched cards, paper tape, and/or magnetic tape and operated solely in batch mode to support back office functions, such as customer billing. Teletype devices were also common, for system operators, in implementing programming techniques. By the early 1970s, many mainframes acquired interactive user interfaces and operated as timesharing computers, supporting hundreds of users simultaneously along with batch processing. Users gained access through specialized terminals or, later, from personal computers equipped with terminal emulation software. By the 1980s, many mainframes supported graphical terminals and terminal emulation, but not graphical user interfaces. This format of end-user computing reached mainstream obsolescence in the 1990s due to the advent of personal computers provided with GUIs. After 2000, most modern mainframes have partially or entirely phased out classic terminal access for end-users in favour of Web user interfaces.

Historically, mainframes acquired their name in part because of their substantial size, and because of requirements for specialized heating, ventilation, and air conditioning (HVAC), and electrical power, essentially posing a “main framework” of dedicated infrastructure. The requirements of high-infrastructure design were drastically reduced during the mid-1990s with CMOS mainframe designs replacing the older bipolar technology. IBM claimed that its newer mainframes can reduce data center energy costs for power and cooling, and that they could reduce physical space requirements compared to server farms.

A supercomputer is a computer at the frontline of current processing capacity, particularly speed of calculation. Supercomputers were introduced in the 1960s and were designed primarily by Seymour Cray at Control Data Corporation (CDC), and later at Cray Research. While the supercomputers of the 1970s used only a few processors, in the 1990s, machines with thousands of processors began to appear and by the end of the 20th century, massively parallel supercomputers with tens of thousands of “off-the-shelf” processors were the norm.

Systems with a massive number of processors generally take one of two paths. In one approach, e.g. grid computing, the processing power of a large number of computers in distributed, diverse administrative domains is used opportunistically whenever a computer is available. In the other approach, a large number of processors are used in close proximity to each other, e.g. in a computer cluster. The use of multi-core processors combined with centralization is an emerging direction. As of 2011, Japan’s K computer (a cluster) was the fastest in the world.

Supercomputers are used for highly calculation-intensive tasks such as problems in quantum physics, weather forecasting, climate research, oil and gas exploration, molecular modeling (computing the structures and properties of chemical compounds, biological macromolecules, polymers, and crystals), and physical simulations (such as simulation of airplanes in wind tunnels, simulation of the detonation of nuclear weapons, and research into nuclear fusion).

Grid computing is a term referring to the federation of computer resources from multiple administrative domains to reach a common goal. The grid can be thought of as a distributed system with non-interactive workloads that involve a large number of files. What distinguishes grid computing from conventional high performance computing systems such as cluster computing is that grids tend to be more loosely coupled, heterogeneous, and geographically dispersed. Although a grid can be dedicated to a specialized application, it is more common that a single grid will be used for a variety of different purposes. Grids are often constructed with the aid of general-purpose grid software libraries known as middleware.

Grid size can vary by a considerable amount. Grids are a form of distributed computing whereby a “super virtual computer” is composed of many networked loosely coupled computers acting together to perform very large tasks. For certain applications, “distributed” or “grid” computing can be seen as a special type of parallel computing that relies on complete computers (with onboard CPUs, storage, power supplies, network interfaces, etc.) connected to a network (private, public or the Internet) by a conventional network interface, such as Ethernet. This is in contrast to the traditional notion of a supercomputer, which has many processors connected by a local high-speed computer bus.

Grid computing combines computers from multiple administrative domains to reach a common goal, to solve a single task, and may then disappear just as quickly.

One of the main strategies of grid computing is to use middleware to divide and apportion pieces of a program among several computers, sometimes up to many thousands. Grid computing involves computation in a distributed fashion, which may also involve the aggregation of large-scale cluster computing-based systems.
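The divide-and-apportion strategy described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `split_into_chunks`, `sum_of_squares` and `run_on_grid` are hypothetical, and a local thread pool stands in for the remote machines that real grid middleware would coordinate over a network.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_chunks):
    """Divide a list into n_chunks nearly equal contiguous pieces."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks each take one additional item.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The piece of work each (hypothetical) grid node performs."""
    return sum(x * x for x in chunk)


def run_on_grid(items, n_nodes=4):
    """Apportion chunks to workers, then combine the partial results."""
    chunks = split_into_chunks(items, n_nodes)
    # Assumption: local threads stand in for remote grid nodes.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


total = run_on_grid(list(range(1000)))
```

The same shape (split, compute independently, combine) is what makes a task suitable for a grid: the pieces do not need to talk to each other while they run.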

The size of a grid may vary from small—confined to a network of computer workstations within a corporation, for example—to large, public collaborations across many companies and networks. “The notion of a confined grid may also be known as an intra-nodes cooperation whilst the notion of a larger, wider grid may thus refer to an inter-nodes cooperation”.

This technology has been applied to computationally intensive scientific, mathematical, and academic problems through volunteer computing, and it is used in commercial enterprises for such diverse applications as drug discovery, economic forecasting, seismic analysis, and back office data processing in support of e-commerce and Web services.

Coordinating applications on Grids can be a complex task, especially when coordinating the flow of information across distributed computing resources. Grid workflow systems have been developed as a specialized form of a workflow management system designed specifically to compose and execute a series of computational or data manipulation steps, or a workflow, in the Grid context.

A quantum computer is a device for computation that makes direct use of quantum mechanical phenomena, such as superposition and entanglement, to perform operations on data. Quantum computers are different from digital computers based on transistors. Whereas digital computers require data to be encoded into binary digits (bits), quantum computation utilizes quantum properties to represent data and perform operations on these data. A theoretical model is the quantum Turing machine, also known as the universal quantum computer. Quantum computers share theoretical similarities with non-deterministic and probabilistic computers, like the ability to be in more than one state simultaneously. The field of quantum computing was first introduced by Richard Feynman in 1982.
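To make the idea of superposition a little more concrete, here is a minimal sketch of how a single qubit can be described on a classical computer: as a pair of complex amplitudes whose squared magnitudes give the measurement probabilities. The Hadamard gate used here is a standard textbook operation and is not mentioned in the text above; the function names are illustrative only.

```python
import math


def apply_hadamard(state):
    """Apply the Hadamard gate to a one-qubit state (amp_0, amp_1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Probability of measuring 0 or 1: squared magnitude of each amplitude."""
    return tuple(abs(amp) ** 2 for amp in state)


zero = (1 + 0j, 0 + 0j)       # the qubit starts in the definite state 0
plus = apply_hadamard(zero)   # now an equal superposition of 0 and 1
probs = probabilities(plus)   # approximately (0.5, 0.5)
back = apply_hadamard(plus)   # a second Hadamard undoes the first
```

A classical bit would be either 0 or 1 at this point; the qubit carries both amplitudes at once until it is measured.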

Although quantum computing is still in its infancy, experiments have been carried out in which quantum computational operations were executed on a very small number of qubits (quantum bits). Both practical and theoretical research continues, and many national government and military funding agencies support quantum computing research to develop quantum computers for both civilian and national security purposes, such as cryptanalysis.

Large-scale quantum computers could solve certain problems much faster than any classical computer using the best currently known algorithms, for example integer factorization using Shor’s algorithm or the simulation of quantum many-body systems. There exist quantum algorithms, such as Simon’s algorithm, which run faster than any possible probabilistic classical algorithm.

Given unlimited resources, a classical computer can simulate an arbitrary quantum algorithm, so quantum computation does not violate the Church–Turing thesis. However, in practice infinite resources are never available, and the computational basis of 500 qubits, for example, would already be too large to be represented on a classical computer because it would require $2^{500}$ complex values to be stored. (For comparison, a terabyte of digital information stores only $2^{43}$ discrete on/off values.) Nielsen and Chuang point out that “Trying to store all these complex numbers would not be possible on any conceivable classical computer.”
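The storage argument above can be checked with a few lines of arithmetic. The sketch below assumes a binary terabyte ($2^{40}$ bytes) and, purely for illustration, 16 bytes per complex value (two 64-bit floating-point numbers); neither figure comes from the text, and the function names are hypothetical.

```python
BYTES_PER_COMPLEX = 16  # assumption: two 64-bit floats per amplitude


def amplitude_count(n_qubits):
    """Number of complex values needed to describe n_qubits in general."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits):
    """Memory needed to store every amplitude, under the assumption above."""
    return amplitude_count(n_qubits) * BYTES_PER_COMPLEX


# A binary terabyte holds 2**40 bytes of 8 bits each, i.e. 2**43 bits.
terabyte_bits = (2 ** 40) * 8

# Python integers have arbitrary precision, so 2**500 is computed exactly.
digits_in_2_500 = len(str(amplitude_count(500)))

# By contrast, 30 qubits would already need 16 GiB under this assumption.
gib_for_30_qubits = state_vector_bytes(30) // 2 ** 30
```

Each extra qubit doubles the number of amplitudes, which is why the requirement grows so quickly beyond what any classical memory could hold.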